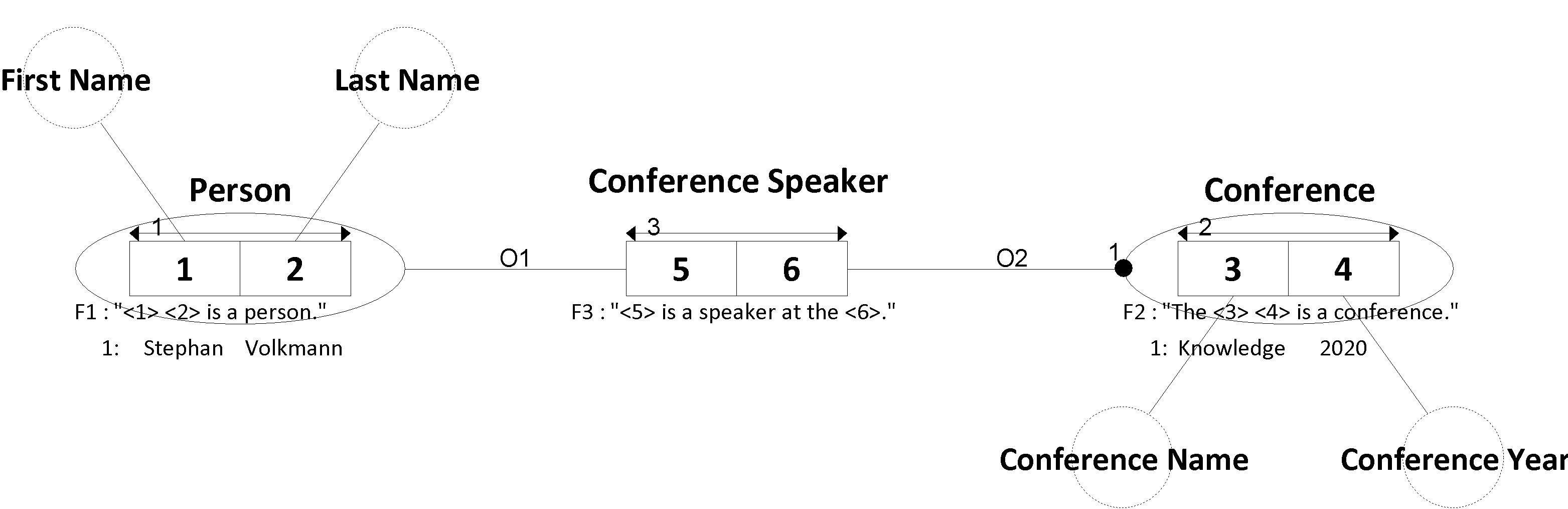

I, Stephan, am very happy to have been invited to give a presentation at Knowledge Gap 2020 in Munich.

My presentation is about advanced techniques in Fact-Oriented Modeling.

Data models are often built with a technical focus because they need to be delivered fast or must meet various technical requirements. As a result, the business aspect and the meaning of objects and relationships are swept under the rug. Later, the business domain hardly understands the data model and struggles to work with it in its own applications or reports, which often leads to a redesign of the data model and to renewed expenditure of time and money.

An EXASOL Webinar series

We are back after a long time with a new webinar. The last one we (Mathias and I) did together was almost four years ago. Time flies! What's up this time?

The fictitious company FastChangeCo™ has developed a way not only to manufacture Smart Devices, but also to extend them as wearables, in the form of bio-sensors, to clothing and living beings. Each of these devices generates a large amount of (sensitive) data by recording, processing and evaluating personal and environmental data.

I am pleased to say that I will be participating in this year’s Enterprise Data & Business Intelligence and Analytics Conference Europe, 18-22 November 2019, in London. I will be speaking on the subject ‘From Conceptual to Physical Data Vault Data Model’ and (of course) on my hobbyhorse, temporal data: ‘Send Bi-Temporal Data from Ground to Vault to the Stars’. See my abstracts for the sessions below.

OK, for those of you who just want to grab a promotion code... I'll make it short 😂:

TEDAMOH

As a speaker at both conferences, I can offer you a 15% discount on DMZ EU and a 20% discount on DMZ US. You can register here.

For those of you who are also interested in what I'm going to talk about, here is some more information:

Or the battle announcement of the incoming interface

The BI Center of Competence (CoC) has decided to use bitemporal data storage when setting up a new data warehouse for one of the business units of the fictitious company FastChangeCo™.

The BI CoC is well advanced in the bitemporal implementation of Data Vault database objects and loading patterns. The systems already connected via formally defined incoming interfaces have worked without problems so far.

In recent weeks I have read many pessimistic and negative articles and comments on social media about the state of data modeling in companies, not only in Germany but worldwide.

Why? I don't know. I can't understand it.

I know many companies that invest a lot of time in data modeling because they have understood the added value. I know many companies that initially rejected data modeling as a whole, but came to understand its benefits through persuasion and training.

Shouldn't we (consultants, managers, project managers, subject-matter experts, etc.) have a positive influence on data modeling? Shouldn't we support our partners in projects in such a way that data modeling becomes a success? If we ourselves do not believe that data modeling is a success, then who will?

We, Stephan and I, are looking forward to welcoming you at the TDWI Conference at the MOC Munich from June 24th to 26th, 2019! Meet us at our booth, discuss bitemporal topics, Data Vault, or data modeling in general with us, and attend one of Dirk Lerner's lectures:
